The FSM was recognised as an institution of regulated self-regulation under the NetzDG.

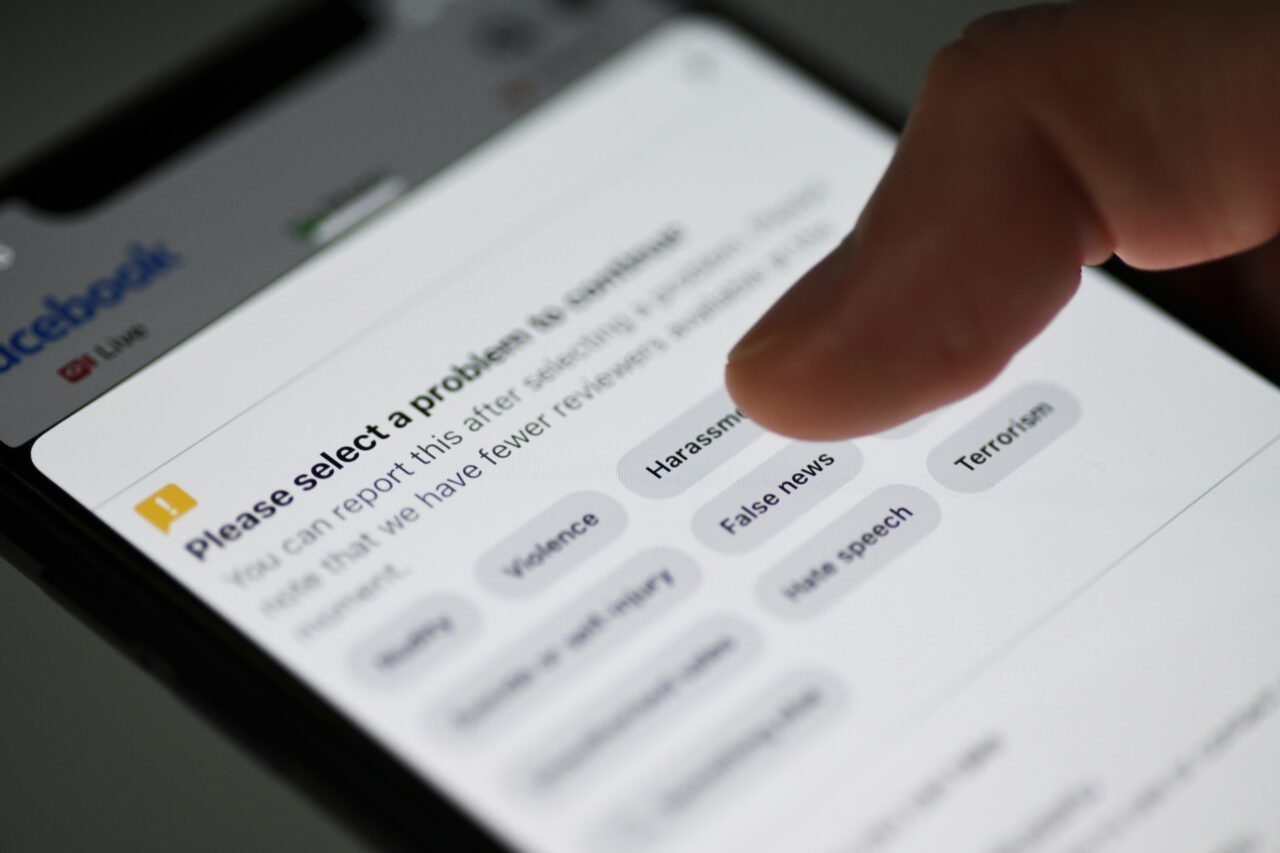

The Network Enforcement Act (NetzDG) came into force in 2017 and was primarily intended to combat hate speech and fake news on social networks such as Facebook, Twitter or YouTube. Under the law, online networks must delete illegal content. The NetzDG also allows social networks to transfer the decision on whether content is illegal to a state-recognised institution of regulated self-regulation. In 2020, the FSM was recognised as such an institution.

As the NetzDG is to be repealed with the introduction of the Digital Services Act, the FSM ceased its work in this area at the end of June 2023. Platform providers can still turn to third parties such as the FSM when assessing the legality of content under the new legal framework. However, this option is not explicitly enshrined in the law and therefore does not offer the same legal certainty or privileges.

The FSM was the first and only institution of regulated self-regulation recognised under the NetzDG.

Providers’ legal obligations

The NetzDG imposes extensive obligations on social networks, covering a total of 21 criminal offences. Networks must delete obviously illegal content within 24 hours. Illegal content that is not obvious must be deleted within 7 days.

In addition to a semi-annual report, the NetzDG requires networks to provide a transparent and suitable complaints procedure for all users.

Systematic violations can be punished with fines of up to 50 million euros. The Federal Office of Justice, which is responsible for imposing fines under the law, has drawn up guidelines on fines.

External expert committee decides controversial legal issues

Until the end of June 2023, full members of the FSM could refer more difficult cases to an external body that decided whether the reported content was illegal. This external expert committee, the Review Panel, consisted of lawyers who made their decisions independently.

If providers used this option, they were bound by the decisions of the self-regulatory body and had to take the appropriate measures.

Decisions of the NetzDG Review Panel

The NetzDG Review Panel decided whether a complaint was justified and, if so, which offence had been committed. The committees made a total of 230 decisions from 2020 to 2023. In 2022, they made 98 decisions and found the content unlawful in 35 of those cases.

All decisions of the FSM are published in anonymised form in German.
